4 – Artificial Intelligence Act: Safe, reliable and human-centred artificial intelligence

The European Commission's proposal for regulating artificial intelligence is a global first, introducing a comprehensive legal framework in this domain. The Act targets both providers and users of AI systems and provides for significant penalties for non-compliance, potentially up to 40 million euros or 7% of total worldwide annual turnover, which underscores the importance of complying with the new rules. At the heart of the Act is a commitment to fostering trust in artificial intelligence, which is deemed essential for unlocking the vast social and economic potential of AI technologies.

We are closer than ever to having the world’s first comprehensive legal framework for artificial intelligence in Europe. However, the emergence and rapid rise of ever more powerful technologies such as OpenAI’s GPT-4 raise new questions. European AI companies’ interest in competing with their peers in the US and China on the one hand, and European policymakers’ wish to regulate artificial intelligence to ensure the development and use of safe, reliable and human-centred technologies on the other, seem to put the EU’s ambition of being a global leader at risk.

The coming weeks will show whether the EU is able to resolve the contentious issues and finalise the Artificial Intelligence Act as originally planned, by the end of this year or early next year.

In this article, we will touch upon the key elements of the Artificial Intelligence Act, its legislative status in the EU and conclude by looking at the way forward.

Legislative status of the AI Act

The AI Act was proposed by the European Commission in April 2021. Both the European Parliament and the Council have adopted their positions on the proposal, and the inter-institutional negotiations, known as trilogues, started in June 2023. Meetings have so far been held in June, July, September and October 2023.

In the most recent trilogue session on 25 October 2023, the representatives of the Parliament, the Council and the Commission reached consensus in some areas. However, important issues remain open, such as the development and use of foundation models and general-purpose AI. These outstanding issues will be discussed at the next trilogue session on 6 December 2023.

It was originally expected that the representatives would reach full agreement at the December session, but it now seems likely that the negotiations will continue into early 2024. The AI Act is expected to enter into force in 2026.

For the EEA countries, including Norway, the AI Act is considered EEA-relevant. However, Norway's current position is that it is too early to conclude whether the proposal is acceptable.

The following sections of this article are based on the European Commission's proposal. As the negotiations continue, the final text of the law is likely to differ from the proposal, so please be aware that the information below may be subject to change.

Scope and application of the AI Act

The AI Act lays down harmonised rules for the use and provision of artificial intelligence systems ("AI systems"), and defines AI systems as software that (i) is developed with machine learning, logic- and knowledge-based and/or statistical approaches and/or with search and optimisation methods and (ii) can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.

The proposal regulates the providers of artificial intelligence systems, and entities making use of them in a professional capacity. It does not cover use of an AI system in the course of a personal non-professional activity. The AI Act applies to:

- providers placing on the market or putting into service AI systems in the European Union, irrespective of whether those providers are established within the EU or in a third country;

- users of AI systems located within the EU; and

- providers and users of AI systems that are located in a third country, where the output produced by the system is used in the EU.

The AI Act applies to all sectors, except for AI systems developed or used exclusively for military purposes.

What is the essence of the AI Act?

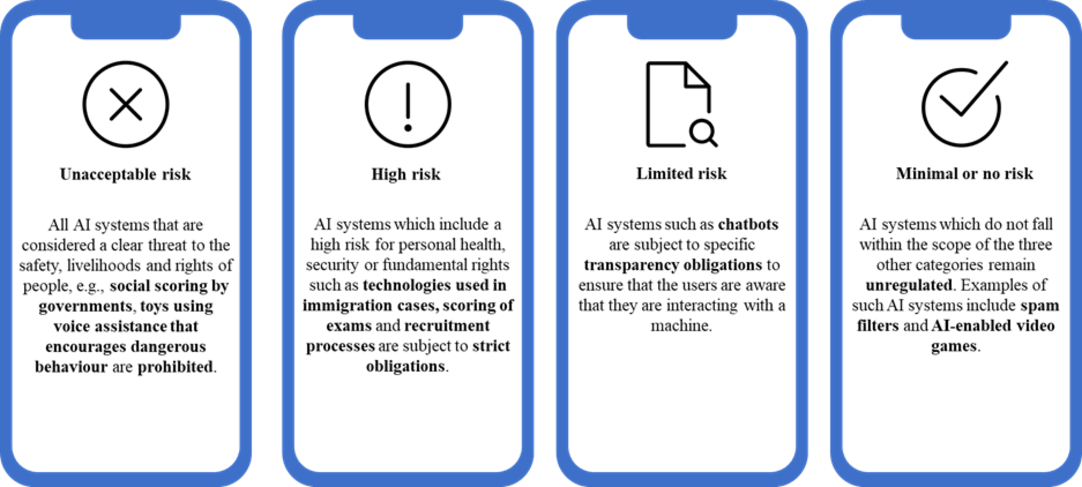

The proposal takes a risk-based approach, which means that the higher the risk posed by the use of an AI system, the more strictly it is regulated. The AI Act therefore categorises AI systems into four risk levels: unacceptable risk, high risk, limited risk, and minimal or no risk.

1. Unacceptable risk: Prohibited AI systems

The AI Act expressly prohibits the provision and use of the following AI systems:

- An AI system that deploys subliminal techniques beyond a person’s consciousness in order to materially distort a person’s behaviour in a manner that causes or is likely to cause that person or another person physical or psychological harm;

- An AI system that exploits any of the vulnerabilities of a specific group of persons due to their age, physical or mental disability, in order to materially distort the behaviour of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm;

- AI systems used or provided by public authorities for the evaluation or classification of the trustworthiness of natural persons over a certain period of time based on their social behaviour or known or predicted personal or personality characteristics, with the social score leading to either or both of the following:

  - Detrimental or unfavourable treatment of certain natural persons or whole groups thereof in social contexts which are unrelated to the contexts in which the data was originally generated or collected;

  - Detrimental or unfavourable treatment of certain natural persons or whole groups thereof that is unjustified or disproportionate to their social behaviour or its gravity;

- The use of ‘real-time’ remote biometric identification systems in publicly accessible spaces for the purpose of law enforcement, unless and in so far as such use is strictly necessary for one of the following objectives:

  - The targeted search for specific potential victims of crime, including missing children;

  - The prevention of a specific, substantial and imminent threat to the life or physical safety of natural persons or of a terrorist attack;

  - The detection, localisation, identification or prosecution of perpetrators or suspects of certain criminal offences.

2. High-risk: Only allowed if certain criteria are fulfilled

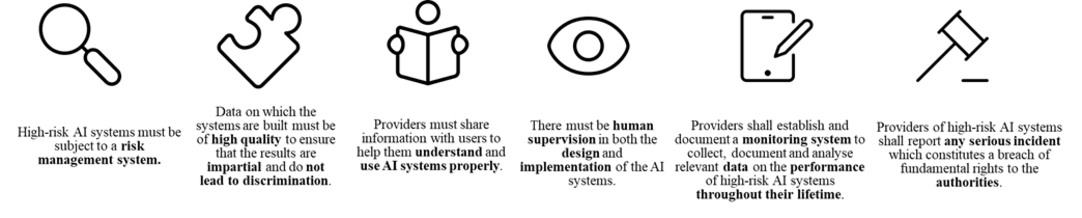

High-risk AI systems are systems that pose a high risk to the health and safety or fundamental rights of natural persons. Rather than providing an exhaustive list of high-risk AI systems, the law sets out classification rules.

Accordingly, an AI system shall be considered high-risk where both of the following conditions are fulfilled:

- The AI system is intended to be used as a safety component of a product, or is itself a product, that is covered by the EU legislation listed in Annex II of the AI Act. These products include, but are not limited to, recreational craft and personal watercraft, lifts and safety components for lifts, and cableway installations.

- The product whose safety component is the AI system, or the AI system itself as a product, is required to undergo a third-party conformity assessment pursuant to the EU legislation listed in Annex II.

In addition to the AI systems fulfilling the foregoing criteria, the AI systems listed in Annex III of the AI Act are also considered high-risk. These AI systems include, among others, AI systems intended to be used for the ‘real-time’ and ‘post’ remote biometric identification of natural persons and AI systems intended to be used as safety components in the management and operation of road traffic and the supply of water, gas, heating and electricity.

According to the European Commission, other examples of high-risk AI systems include technologies used in education or vocational training that may determine someone's access to education and the professional course of their life (e.g. scoring of exams); in employment, management of workers and access to self-employment (e.g. CV-sorting software for recruitment procedures); and in migration, asylum and border control management (e.g. verification of the authenticity of travel documents).
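The classification rules above reduce to a simple decision: the Annex II route requires both conditions to be fulfilled, while a listing in Annex III is sufficient on its own. The Python sketch below illustrates only that logic. The class and field names are hypothetical, and in practice each input is itself the outcome of a legal assessment rather than a simple yes/no flag.

```python
from dataclasses import dataclass


@dataclass
class AISystemFacts:
    """Hypothetical summary of legal findings about one AI system."""

    # Condition 1: safety component of, or itself, a product covered by Annex II legislation.
    annex_ii_product_or_safety_component: bool
    # Condition 2: that product must undergo third-party conformity assessment under Annex II.
    annex_ii_third_party_assessment: bool
    # Separate route: the system's intended use is listed in Annex III.
    listed_in_annex_iii: bool


def is_high_risk(facts: AISystemFacts) -> bool:
    """Sketch of the high-risk classification rules in the Commission proposal."""
    # Annex II route: BOTH conditions must be fulfilled.
    annex_ii_route = (
        facts.annex_ii_product_or_safety_component
        and facts.annex_ii_third_party_assessment
    )
    # Annex III route: the listing alone is sufficient.
    return annex_ii_route or facts.listed_in_annex_iii


if __name__ == "__main__":
    # CV-sorting software for recruitment: an Annex III use case.
    cv_sorting = AISystemFacts(False, False, True)
    # Annex II product, but no third-party conformity assessment required.
    annex_ii_self_assessed = AISystemFacts(True, False, False)
    print(is_high_risk(cv_sorting))              # True
    print(is_high_risk(annex_ii_self_assessed))  # False
```

Keeping the two routes as separate expressions mirrors the structure of the rules: failing one Annex II condition does not rule out high-risk status if the system is also listed in Annex III.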

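Such high-risk AI systems are only allowed if certain requirements are fulfilled. The main requirements are as follows:

- an adequate risk management system;

- high-quality data sets and appropriate data governance, to minimise risks and discriminatory outcomes;

- technical documentation enabling authorities to assess the system's compliance;

- record-keeping (logging of activity) to ensure traceability of results;

- transparency and clear, adequate information to users;

- appropriate human oversight; and

- a high level of accuracy, robustness and cybersecurity.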

3. Limited risk: Additional transparency obligations

There are additional transparency obligations for certain AI systems.

- AI systems intended to interact with natural persons should be designed and developed in such a way that natural persons are informed that they are interacting with an AI system, unless this is obvious from the circumstances and the context of use.

- Users of an emotion recognition system or a biometric categorisation system shall inform natural persons about the operation of the system.

- Users of an AI system that generates or manipulates image, audio or video content that appreciably resembles existing persons, objects, places or other entities or events and would falsely appear to a person to be authentic or truthful (‘deep fake’), shall disclose that the content has been artificially generated or manipulated.

All AI systems mentioned above are subject to the transparency obligations described in this section. However, if such an AI system is also determined to be high-risk, it will be subject to the obligations described in Section 2 above as well.

4. Minimal risk: Not subject to special requirements or obligations

Most AI systems are considered to pose minimal risk to individuals and society, and AI systems not covered by the foregoing rules are not subject to any special obligations under the AI Act.

Consequences of non-compliance

According to the European Commission's proposal, the most serious infringements of the AI Act may lead to fines of up to 30 000 000 EUR or, for companies, up to 6% of total worldwide annual turnover for the preceding financial year, whichever is higher. However, the European Parliament has proposed increasing these maximum fines to 40 000 000 EUR or 7% of a company's total worldwide annual turnover. The final level of administrative fines will be determined in the inter-institutional negotiations in the European Union.
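Because each ceiling is expressed as two alternatives, a short calculation shows which one applies for a given company. The sketch below assumes, as in Article 71 of the Commission proposal, that for a company the ceiling is whichever of the two amounts is higher, and that the Parliament's figures work the same way. The function name is hypothetical, and it computes only the maximum possible fine, not the fine an authority would actually impose.

```python
# Hypothetical helper: computes only the statutory CEILING, not an actual fine.
def max_fine_eur(turnover_eur: int, fixed_cap_eur: int, turnover_pct: int) -> float:
    """Return the higher of a fixed amount and a percentage of worldwide turnover."""
    return max(fixed_cap_eur, turnover_eur * turnover_pct / 100)


# Top-tier ceilings discussed in the article: (fixed amount in EUR, % of turnover).
COMMISSION_PROPOSAL = (30_000_000, 6)
PARLIAMENT_POSITION = (40_000_000, 7)

if __name__ == "__main__":
    turnover = 1_000_000_000  # illustrative worldwide annual turnover of EUR 1 billion
    print(max_fine_eur(turnover, *COMMISSION_PROPOSAL))  # 60000000.0
    print(max_fine_eur(turnover, *PARLIAMENT_POSITION))  # 70000000.0
    # For a smaller company, the fixed amount is the higher of the two.
    print(max_fine_eur(100_000_000, *COMMISSION_PROPOSAL))  # 30000000
```

For a company with EUR 1 billion in turnover, the percentage-based limit exceeds the fixed amount under both positions; below EUR 500 million in turnover, the fixed 30 000 000 EUR would be the higher figure under the Commission proposal.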

The way forward

One of the most contentious issues in the trilogues has been foundation models, such as the GPT models behind ChatGPT. In the October session, the representatives seemed to agree on incorporating foundation models into the tiered, risk-based approach explained above. In practice, this would mean that foundation models are categorised into one of the risk groups (unacceptable risk, high risk, limited risk, or minimal or no risk) based on how powerful they are and their impact on society, with the corresponding rules and obligations applying to each model.

However, at a technical meeting on 10 November 2023, representatives from France, Germany and Italy objected to any type of regulation being imposed on foundation models. The representatives appear to have been led in this direction by AI companies in their respective countries, which fear that strict EU rules on AI technologies might put them at a disadvantage compared with their competitors in the US and China.

These recent developments put at risk the EU's ambition of becoming a global leader in artificial intelligence by putting in place the first comprehensive legal framework in this area. Meanwhile, policymakers in the US, UK and China are becoming increasingly active in their work on AI regulation.

Our Technology and Digitalisation team is following the legislative developments relating to artificial intelligence closely and will be happy to answer your questions.